The End of Deploy and Pray

It's been just over three years since the launch of ChatGPT, and enterprises are asking the question they should have asked earlier: have we been part of yet another hype cycle?

Foundation models have become more powerful. Yet in the enterprise context, implementations continue to drag on and get derailed, and when they do reach production, new challenges around ROI and governance start popping up.

The last few years have been about promise. Models will get better. Hallucinations will fix themselves. GPT-5.1, Gemini 3, Claude 5 are around the corner. Move fast or you'll miss the bus: that's the message boards sent to their CEOs. And they did move fast. Billions got spent. Pilots got launched. The models did get better. But the problem didn't go away.

Here's what nobody wanted to say out loud for the longest time: everything an LLM does is hallucination. It does the same thing every time: pattern matching on probabilities. When the output matches your expectation, you call it intelligence. When it doesn't, you call it hallucination. Same mechanism. Different luck. You can't build compliance systems on luck.

Between model releases, new jargon popped up. Fine-tune. RAG. Prompt engineer harder. Add evals. Add guardrails. The belief underneath stayed the same: we can prompt our way to reliability.

Key Distinction

No, you can't. Not in healthcare. Not in financial services. Not anywhere compliance isn't optional. Architecturally, models were never designed to solve this problem. The hype made us believe they would.

Everybody tries to solve the problem with LLMs alone. It's like trying to get from point A to point B with just gasoline: no engine, no car, just fuel and a spark. Sure, it might combust. But you're not going anywhere predictable. And because prompting hasn't worked, the strategy has shifted to praying.

Deploy and pray. That's not a criticism; it's the actual strategy. I've sat in rooms where this was the plan. Ship it. Hope it holds.

The message beneath all of this has been consistent: enterprise systems have been built on determinism, while the foundation of most agentic systems is probabilistic. Even at 95% accuracy, you're dealing with a probability. A maybe. Across 1,000 decisions, that's roughly 50 wrong ones, and you don't know in advance which 50. And because the pot of gold at the end of the rainbow is 50% headcount cuts and previously unimagined profit pools, you're told you must leverage it anyway.

But you can't have it all. Pick innovation or pick compliance. That's the trade-off. CIOs believed it. Legal teams enforced it. And everyone accepted this as just how it works.

It doesn't have to be this way.

Enterprises can't afford this trade-off between governance and innovation. And here's the thing: it was never a real trade-off. It was a missing layer. Nobody built the infrastructure to govern agents across their lifecycle, so enterprises were forced to choose. Or pray. With the right infrastructure, you can finally have both.

To be clear, infrastructure in this context doesn't mean better prompts. Or bigger models. Or another eval framework.

A single source of truth cannot be 20,000 chunks of text embedded in a vector database. That's not governance; that's a false sense of security. Guardrails need to refer to validated truth. Human-reviewed. Structured. Deterministic.

Lessons from building symbolic AI

What we're talking about is a different foundation altogether. A neuro-symbolic approach. The best of both worlds.

I spent the early part of my career in symbolic AI. Forty years ago, symbolic AI alone couldn't scale: too many rules, too brittle. Today, neural networks alone can't govern: too probabilistic, too opaque.

The answer, we believe, lies in taking the middle path.

Domain knowledge via ontologies. Knowledge graphs for determinism. That's the engine. The LLM is the fuel: the power of foundation models to understand enterprise language at scale. The intelligence isn't in the model; it's in your knowledge structure. How knowledge is organized. How concepts connect. What meaning you bring to the data. Get that right, and the LLM becomes useful. Get it wrong, and you're back where you started: down the dark hole. The knowledge structure is what makes AI auditable. It's what makes compliance provable.

That's the path we have followed to take pilots to production. Organize knowledge before you build. Validate before you deploy. Enforce while agents run. Check compliance after every run.

Governance isn't a gate you add at the end. It's infrastructure across the lifecycle. It's the Enterprise Trust Layer. Built in. Not bolted on. The end of deploy and pray. The reliability of deploy and prove.

If you're building agents for regulated industries and you're done choosing between innovation and compliance, this is the shift.

We're publishing the principles, the architecture, and the lessons.

Follow along.

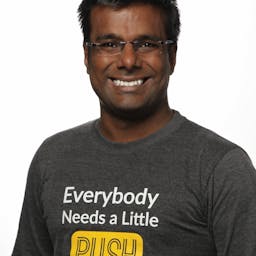

Vivek Khandelwal

2X founder who has built multiple companies over the last 15 years. He bootstrapped iZooto to multi-millions in revenue. He graduated from IIT Bombay and has deep experience across product marketing and GTM strategy. He mentors early-stage startups at Upekkha and in SaaSBoomi's SGx program. At CogniSwitch, he leads all things Marketing, Business Development and Partnerships.